How AI image tools can be tricked into making political propaganda

Original Article Summary

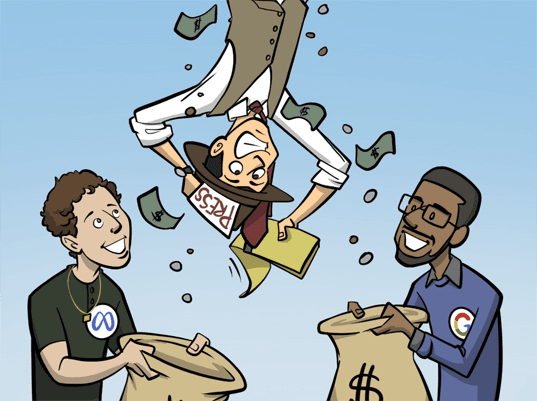

A single image can shift public opinion faster than a long post. Text-to-image systems can be pushed to create misleading political visuals, even when safety filters are in place, according to a new study. The researchers examined whether commercial text to i…

Read full article at Help Net Security

Our Analysis

Help Net Security's report covers a new study showing that AI image tools can be tricked into producing political propaganda even when safety filters are in place, and that the resulting visuals can spread misinformation. Website owners, particularly in the news, media, and political sectors, therefore need to scrutinize the AI-generated images they encounter and may end up hosting. Misleading political visuals made this way can circulate through websites, social media, and other online channels, where they can sway public opinion and erode trust in online information.

To reduce these risks, website owners can take several steps. First, track AI bot activity on their sites; this reveals which automated agents are crawling their content, although it does not by itself detect manipulated images. Second, review their crawler directives regularly. Rules about which bots may access a site belong in robots.txt, which compliant crawlers honor voluntarily; llms.txt, a proposed convention for summarizing site content for language models, cannot block anything, and neither file filters propaganda arriving from other sources. Third, add moderation or fact-checking steps that verify the origin and authenticity of images, especially AI-generated ones, before they are published.
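To illustrate the crawler-directive step, here is a minimal sketch that uses Python's standard-library `urllib.robotparser` to check how a set of robots.txt rules would apply to named AI crawlers. The robots.txt content, the example URL, and the `check_access` helper are hypothetical. `GPTBot`, `ClaudeBot`, and `CCBot` are crawler names published by OpenAI, Anthropic, and Common Crawl, but which bots a site lists is its own decision. Python's parser may not interpret every rule exactly as a given crawler does, and the result only describes what compliant bots are expected to do.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules, for illustration only.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: ClaudeBot
Disallow: /newsroom/

User-agent: *
Allow: /
"""


def check_access(robots_txt: str, agents: list[str], url: str) -> dict[str, bool]:
    """Report whether each user agent may fetch `url` under the given robots.txt rules."""
    parser = RobotFileParser()
    # parse() takes an iterable of lines and marks the rules as loaded,
    # so can_fetch() works without fetching anything over the network.
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


if __name__ == "__main__":
    url = "https://example.com/newsroom/story"
    results = check_access(ROBOTS_TXT, ["GPTBot", "ClaudeBot", "CCBot"], url)
    for agent, allowed in results.items():
        # CCBot has no group of its own, so the catch-all "*" group applies to it.
        print(f"{agent}: {'allowed' if allowed else 'blocked'}")
```

Run as a script, this reports GPTBot and ClaudeBot as blocked for that URL and CCBot as allowed through the catch-all group. Keeping the rules in a string makes it easy to test a proposed robots.txt before deploying it.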

Related Topics

Track AI Bots on Your Website

See which AI crawlers, such as those behind ChatGPT, Claude, and Gemini, are visiting your site. Get real-time analytics and actionable insights.
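As a rough illustration of what crawler tracking involves, and not a description of how any particular tracking service works, the sketch below counts requests from known AI crawlers in a web server access log. It assumes the Combined Log Format, where the User-Agent is the last double-quoted field. The token list and the `count_ai_crawlers` helper are hypothetical, and the list is far from exhaustive. User-Agent strings can be spoofed, so the counts are indicative rather than proof of which bot made a request.

```python
import re
from collections import Counter

# Illustrative User-Agent tokens for some publicly documented AI crawlers;
# this list is an assumption and is not exhaustive.
AI_CRAWLER_TOKENS = ("GPTBot", "ChatGPT-User", "ClaudeBot", "PerplexityBot", "CCBot")

# In the Combined Log Format the User-Agent is the last double-quoted field.
USER_AGENT_FIELD = re.compile(r'"([^"]*)"\s*$')


def count_ai_crawlers(log_lines):
    """Count requests per AI crawler token across access-log lines."""
    counts = Counter()
    for line in log_lines:
        match = USER_AGENT_FIELD.search(line)
        if match is None:
            continue  # skip lines that do not end in a quoted User-Agent
        user_agent = match.group(1)
        for token in AI_CRAWLER_TOKENS:
            if token in user_agent:
                counts[token] += 1
                break  # attribute each request to at most one crawler
    return counts


if __name__ == "__main__":
    sample = [
        '203.0.113.5 - - [10/Oct/2024:13:55:36 +0000] "GET /news HTTP/1.1" 200 512 "-" '
        '"Mozilla/5.0 (compatible; GPTBot/1.2; +https://openai.com/gptbot)"',
        '198.51.100.7 - - [10/Oct/2024:13:57:12 +0000] "GET / HTTP/1.1" 200 128 "-" '
        '"Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
    ]
    print(count_ai_crawlers(sample))
```

In practice the lines would come from the server's log file; an open file object can be passed directly, since iterating it yields one log line at a time.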
