Creative Commons announces tentative support for AI 'pay-to-crawl' systems | TechCrunch

Original Article Summary

The organization offered support for the idea of an AI marketplace and suggested several guiding principles.

Read full article at TechCrunch

Our Analysis

Creative Commons' tentative support for AI 'pay-to-crawl' systems, as reported by TechCrunch, is a notable development for the emerging AI content marketplace. It suggests the organization is open to new models under which AI systems access and use online content while compensating creators for their work.

For website owners, this could mean more control over how AI bots interact with their content, and possibly revenue from AI crawler traffic. If 'pay-to-crawl' systems become widely adopted, owners will need to revisit their content policies and decide whether to opt in, a choice that could affect their llms.txt files and AI bot tracking strategies.

To prepare, website owners should first review their current content licenses and terms of use for compatibility with potential 'pay-to-crawl' systems. They should also monitor updates to Creative Commons' guiding principles and adjust their llms.txt files to reflect the level of AI bot access they want. Finally, they should explore tools and platforms for tracking and managing AI bot traffic, so they can make informed decisions about their content and revenue streams.
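
As a rough illustration of what tracking AI bot traffic can look like, the sketch below counts requests from a few AI crawlers by scanning web server access-log lines for User-Agent substrings. The token list is illustrative and not exhaustive, the function name `count_ai_crawler_hits` is hypothetical, and the sample log lines are made up for the example; check each vendor's documentation for the user agents it actually sends.

```python
from collections import Counter

# Substrings that some AI crawlers include in their User-Agent header.
# Assumption: this list is illustrative only and will need updating.
AI_CRAWLER_TOKENS = ("GPTBot", "ClaudeBot", "PerplexityBot", "CCBot")


def count_ai_crawler_hits(log_lines):
    """Count requests per AI crawler token by matching on the raw log line."""
    counts = Counter()
    for line in log_lines:
        lowered = line.lower()
        for token in AI_CRAWLER_TOKENS:
            if token.lower() in lowered:
                counts[token] += 1
                break  # attribute each request to at most one crawler
    return counts


# Hypothetical access-log lines in a common "combined" style format.
sample_log = [
    '203.0.113.5 - - [16/Dec/2025:10:00:00 +0000] "GET /post HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '203.0.113.9 - - [16/Dec/2025:10:00:05 +0000] "GET /about HTTP/1.1" 200 301 "-" "Mozilla/5.0 (compatible; ClaudeBot/1.0)"',
    '198.51.100.7 - - [16/Dec/2025:10:00:09 +0000] "GET / HTTP/1.1" 200 1024 "-" "Mozilla/5.0 (X11; Linux x86_64) Firefox/130.0"',
    '203.0.113.5 - - [16/Dec/2025:10:01:00 +0000] "GET /feed HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
]

hits = count_ai_crawler_hits(sample_log)
print(dict(hits))
```

Substring matching on the User-Agent is easy to spoof, so counts like these are best treated as a first estimate of crawler activity rather than a basis for billing or enforcement.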

Related Topics

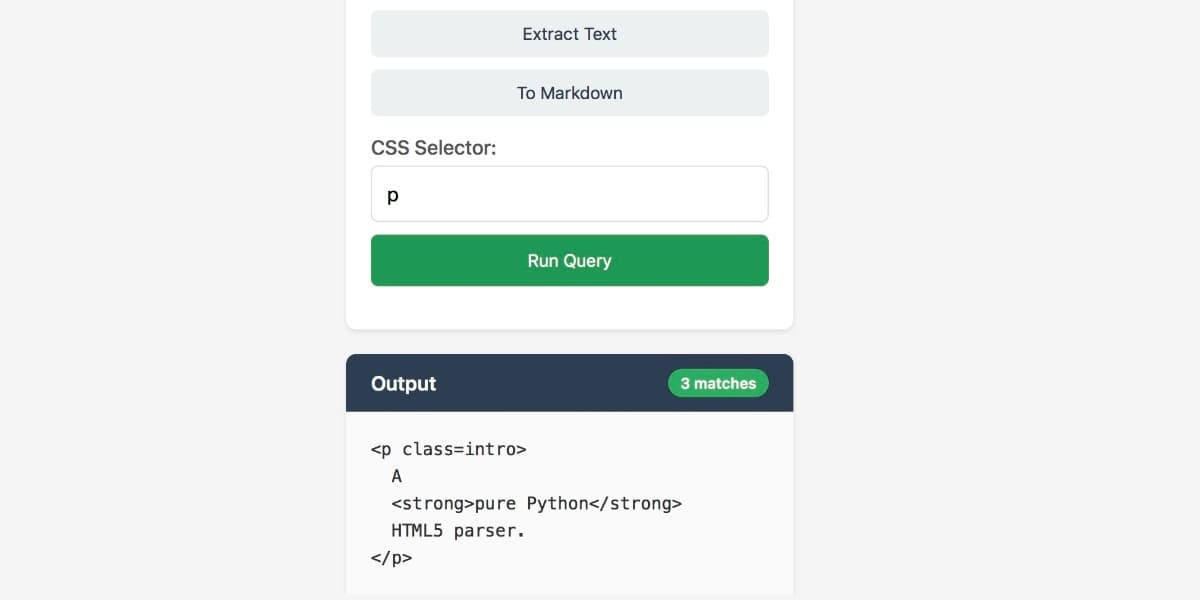

Track AI Bots on Your Website

See which AI crawlers like ChatGPT, Claude, and Gemini are visiting your site. Get real-time analytics and actionable insights.

Start Tracking Free →Related Articles

Cloudflare report reveals global internet internet traffic grew 19% in 2025 - but a lot of it was just bots

12/16/2025

Generative AI makes PR a key business priority in 2026: 35 PR and marketing predictions - Sword and the Script

12/16/2025

JustHTML is a fascinating example of vibe engineering in action

12/16/2025